According to Moore's Law, originally an observation that the number of transistors on a chip doubles at a regular interval, technological progress is exponential: the computational power of computers doubles over a fixed period of time. How long do we have to wait for it to double? Estimates put it at around two years. Currently, the fastest computer in the world achieves about 34 petaflops, which means that it can perform tens of millions of billions (about 3.4 × 10¹⁶) floating-point operations in a single second. Such figures change our perspective and make ever more complicated calculations possible. Things that seemed impossible before are now within easy reach.
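The doubling rule above can be written as P(t) = P₀ · 2^(t/T), where P₀ is today's performance and T is the doubling period. The short Python sketch below assumes T = 2 years and the ~34 petaflop starting point quoted in the text; the function names are illustrative only. It also inverts the formula to estimate how long a given speed-up would take under the same assumption.

```python
import math


def projected_performance(p0: float, years: float, doubling_period: float = 2.0) -> float:
    """Performance after `years`, assuming it doubles every `doubling_period` years."""
    return p0 * 2 ** (years / doubling_period)


def years_to_reach(p0: float, target: float, doubling_period: float = 2.0) -> float:
    """Years needed to grow from p0 to target under the same doubling assumption."""
    return doubling_period * math.log2(target / p0)


# Assumed starting point: ~34 petaflops, the figure quoted in the text.
start_pflops = 34.0
for years in (2, 10, 20):
    print(f"after {years:2d} years: {projected_performance(start_pflops, years):,.0f} petaflops")
# after  2 years: 68 petaflops
# after 10 years: 1,088 petaflops
# after 20 years: 34,816 petaflops

# How long does a thousandfold speed-up take if performance doubles every two years?
print(f"1000x speed-up takes {years_to_reach(1.0, 1000.0):.1f} years")  # 19.9
```

Under these assumptions a thousandfold increase takes about 20 years, because 2¹⁰ ≈ 1000 and each doubling costs two years.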

Thanks to this remarkable technological development, many researchers have turned to analysing the most complex systems known. One example is the human brain, which still outperforms any machine built by humans. It is estimated, however, that supercomputers will reach the computational power of the human brain within about 10 to 15 years. Nonetheless, no one intends to sit and wait. Many ongoing projects study how the brain works; after all, it is the reason why humans have come to dominate the planet.
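Combining the two estimates in the text (performance doubling every two years, brain-level performance in 10 to 15 years) shows the growth factor they implicitly assume. The arithmetic below assumes a doubling period of exactly T = 2 years and the ~34 petaflop starting point quoted earlier:

$$
\frac{P(t)}{P_0} = 2^{t/T}, \qquad T = 2\ \text{years}
$$

$$
t = 10\ \text{years}:\ 2^{5} = 32, \qquad t = 15\ \text{years}:\ 2^{7.5} \approx 181
$$

$$
P_0 \approx 34\ \text{PFLOPS} \;\Rightarrow\; P(10) \approx 1.1\ \text{EFLOPS}, \qquad P(15) \approx 6.2\ \text{EFLOPS}
$$

Taken together, the two figures imply a brain-equivalent performance on the order of 1 to 6 exaflops. This is only a consequence of the numbers in the text, not an independent estimate of the brain's capacity.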

On the other hand, technological growth also means an ever-growing amount of collected and stored data. The flow of information around the world is enormous. There are around a billion websites on the internet, and the number of users is estimated at more than 3 billion. From almost anywhere in the world, we can check stock exchange quotations from the other side of the globe. We can also easily read any of the 5 million articles in the English Wikipedia. Beyond public data, there is a vast amount of information and databases belonging to companies and institutions. This explains the recent surge of interest in skills related to the computational analysis of huge amounts of data. The so-called Data Scientist is currently among the most sought-after positions on the labour market.

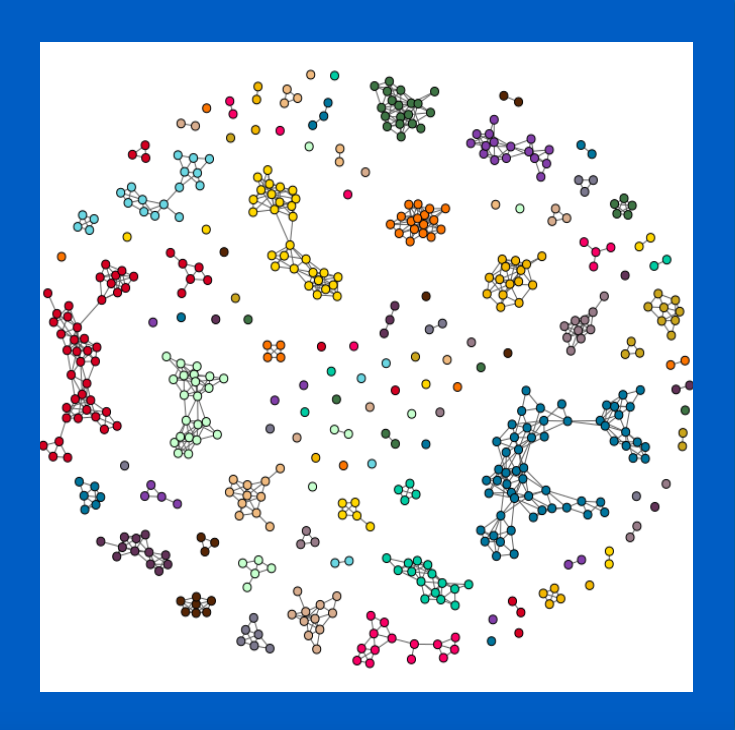

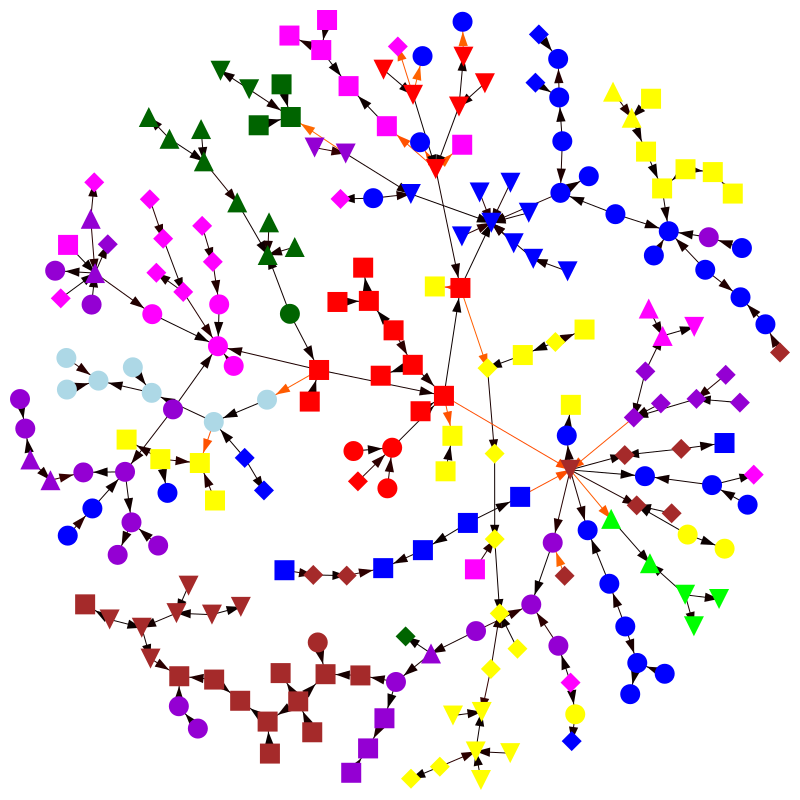

Both perspectives described above share one feature: the need to simplify and understand problems that are too complex to grasp as a whole. This is connected with the notion of so-called complexity. Stephen Hawking suggested that complexity will be the greatest challenge facing modern science, and this view also underlies the proposed project. Specifically, the goal of the project is to propose and develop an algorithm that simplifies complicated structures described by networks and graphs.

Why networks? In recent years, the network approach has proven to be one of the most successful ways of describing and analysing complex systems. The number of papers devoted to so-called complex networks is growing even faster than the computational power of the fastest computers mentioned earlier. A good illustration is the citation history of a classic paper on random networks written by the famous mathematician Paul Erdős. Before 2000, a crucial year for networks and their applications, the paper was cited on average 10 to 20 times a year; in 2005 alone, it was cited more than 150 times. Since then, networks have been used to analyse a wide variety of problems in many different fields: from the brain mentioned above, through statistical physics, telecommunications infrastructure, transport and disease spreading, to terrorism prevention and even finance.
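The text does not spell out what a random network is. As an illustrative sketch, assuming the classic paper meant is the Erdős–Rényi work on random graphs, the Python below builds a G(n, p) random graph, the variant commonly named after Erdős and Rényi: each of the n(n − 1)/2 possible edges is included independently with probability p, so the expected mean degree is p(n − 1). The function names are hypothetical, and this is not the project's simplification algorithm.

```python
from __future__ import annotations

import random
from itertools import combinations


def gnp_random_graph(n: int, p: float, seed: int | None = None) -> dict[int, set[int]]:
    """G(n, p) random graph as an adjacency-set dictionary.

    Every unordered pair of distinct nodes is linked independently with probability p.
    """
    rng = random.Random(seed)
    adj: dict[int, set[int]] = {v: set() for v in range(n)}
    for u, v in combinations(range(n), 2):
        if rng.random() < p:
            # Undirected edge: record it in both adjacency sets.
            adj[u].add(v)
            adj[v].add(u)
    return adj


def mean_degree(adj: dict[int, set[int]]) -> float:
    """Average number of neighbours per node."""
    return sum(len(nbrs) for nbrs in adj.values()) / len(adj)


g = gnp_random_graph(n=1000, p=0.01, seed=42)
print(f"mean degree: {mean_degree(g):.2f} (expected p*(n-1) = {0.01 * 999:.2f})")
```

With n = 1000 and p = 0.01 the printed mean degree should land close to 9.99; the exact value depends on the seed.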

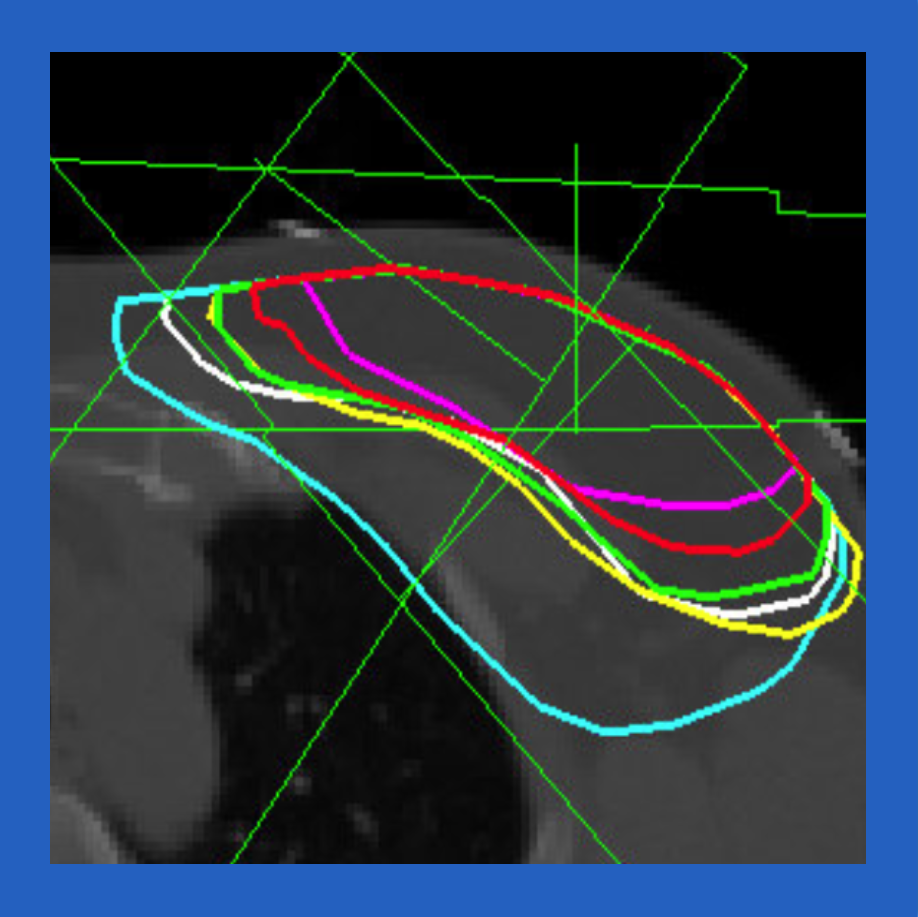

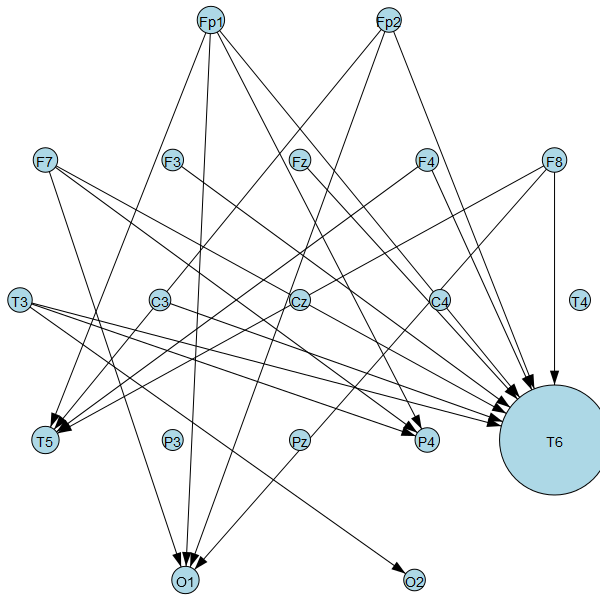

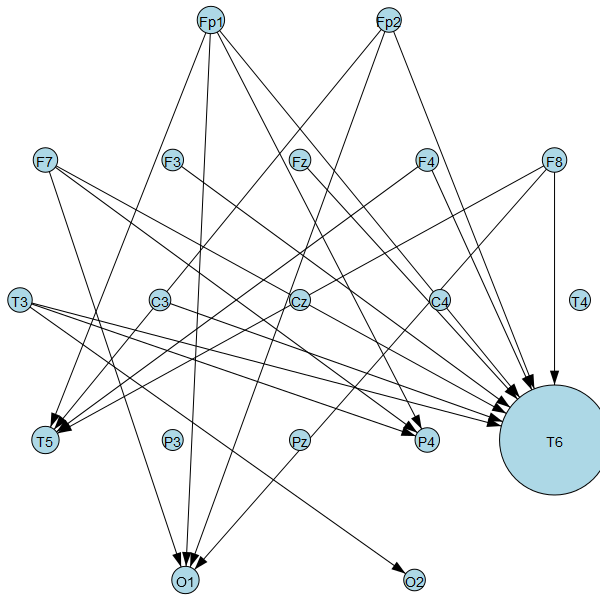

Apart from developing the algorithm, the project also plans to apply it to real data. Thanks to our colleagues from different divisions of the Faculty of Physics at the University of Warsaw, we will be able to test the algorithm on data such as EEG brain signals, seismic records, and financial quotations. We believe that only such an interdisciplinary approach can truly confirm the effectiveness of the proposed algorithm.
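The text does not say how EEG, seismic, or financial time series will be turned into networks. One common approach, used here purely as an assumption, is a correlation network: each signal becomes a node, and two nodes are linked when the absolute Pearson correlation of their values exceeds a chosen threshold. The sketch below uses hypothetical toy signals and an arbitrary threshold of 0.8; it is not the project's algorithm.

```python
from __future__ import annotations

import math
from itertools import combinations


def pearson(x: list[float], y: list[float]) -> float:
    """Pearson correlation coefficient of two equal-length series (0.0 if either is constant)."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    if sx == 0 or sy == 0:
        return 0.0
    return cov / (sx * sy)


def correlation_network(series: dict[str, list[float]], threshold: float) -> set[tuple[str, str]]:
    """Link two signals when the absolute correlation of their values exceeds `threshold`."""
    edges: set[tuple[str, str]] = set()
    for a, b in combinations(sorted(series), 2):
        if abs(pearson(series[a], series[b])) > threshold:
            edges.add((a, b))
    return edges


# Hypothetical toy signals standing in for, e.g., EEG channels or stock prices.
signals = {
    "A": [1.0, 2.0, 3.0, 4.0, 5.0],
    "B": [2.1, 3.9, 6.2, 8.0, 9.9],  # rises together with A
    "C": [5.0, 4.0, 3.0, 2.0, 1.0],  # falls as A rises (strong negative correlation)
    "D": [1.0, 3.0, 1.0, 3.0, 1.0],  # oscillates, unrelated to the trend
}
print(sorted(correlation_network(signals, threshold=0.8)))
# [('A', 'B'), ('A', 'C'), ('B', 'C')]
```

Using the absolute value treats strong anti-correlation (A and C) as a link too; whether that is appropriate, and which threshold to use, depends on the data and is a modelling choice.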